Intro to the Visual Effects Process

This page is in sort of a Q & A format, with the intention of giving those unfamiliar with the visual effects industry a bit of an intro as to how things work. It was written based on questions from a friend's students in NYC, so I am hoping that lots of other people have similar questions and will find this interesting and useful. I am aiming to make this accessible to those not familiar with the standard 3d terminology, so some of the more basic concepts are also explained.

Disclaimer: This was written in 2008, back when I still worked in visual effects. Since then, I’ve moved into the world of feature animation and am not doing much of this process on a daily basis. So, please keep in mind that some of the technical details of file formats may have changed in the time between 2008 and now. Overall the process is still the same, though, so with that in mind, read on!

Starting broad:

Q: What is the general workflow for the visual effects process?

A: The general workflow can actually vary quite a bit from place to place, mainly depending on the size of the house and the number of shots to be completed. This description is based on where I personally work: a medium-sized house (150 or so people) that is only occasionally involved in pre-production. Note that some of the largest houses (such as ILM) are often involved very early in the film process, with things like pre-visualization and concept work. This is written in the context of a house that mostly receives its assets after shooting has wrapped. With that said, on we go...

The fast answer:

Matchmove

Roto/Paint

Modeling

Rigging (if needed)

Animation

Texture/Shader Work

Lighting/Rendering

Effects

Compositing

The long answer:

The visual effects process begins with a number of ingredients. The first ingredient in the mix is the film or video that was shot. This footage usually arrives as a series of still images (as opposed to movie formats like QuickTime), which are referred to as 'plates' (as in film plates). Typically each image is a digital scan of one frame of the original film. These are the source images and, in theory, the only thing they are missing is the visual effects.
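Because plates arrive as numbered still frames rather than as a single movie file, tools usually address them by a name pattern with a zero-padded frame number. The sketch below assumes a hypothetical `<shot>_<element>.<frame>.<ext>` naming scheme with four-digit padding. Real facilities each have their own conventions, and `frame_path`, `frame_range` and `frame_number` are illustrative names, not any particular tool's API.

```python
import re

# Hypothetical naming scheme: <shot>_<element>.<zero-padded frame>.<ext>
FRAME_PATTERN = re.compile(r"\.(\d+)\.[A-Za-z0-9]+$")


def frame_path(shot: str, element: str, frame: int, ext: str = "dpx", padding: int = 4) -> str:
    """Build the file name for one frame of a plate sequence."""
    return f"{shot}_{element}.{frame:0{padding}d}.{ext}"


def frame_range(shot: str, element: str, first: int, last: int, ext: str = "dpx") -> list[str]:
    """List every frame file in an inclusive frame range."""
    return [frame_path(shot, element, f, ext) for f in range(first, last + 1)]


def frame_number(path: str) -> int:
    """Recover the frame number from a file name that follows the scheme above."""
    match = FRAME_PATTERN.search(path)
    if match is None:
        raise ValueError(f"no frame number in {path!r}")
    return int(match.group(1))


if __name__ == "__main__":
    for name in frame_range("sh010", "plate", 1, 3):
        print(name, frame_number(name))
```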

The next ingredient is the concept. This can consist of detailed descriptions, storyboards, concept paintings, and pre-visualization animations (rough animations and effects, often done before the film is shot, to illustrate what is going on). Alternatively, the concept can consist of no more than a lot of hand-waving by the director and some vague descriptions of how things should 'feel'. This varies pretty widely from show to show.

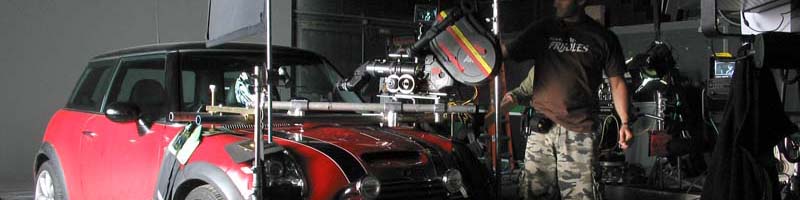

The final ingredients are technical data: camera information (lens info, camera positions, etc.) to aid in the matchmove process (more on this later), chrome sphere or spherical image sequences (to aid lighting in 3d), set blueprints, props used on set, and any number of other things that can vary a bit from show to show.

Stu Maschwitz (one of the founders of The Orphanage, where I work) often states that our job is to take the shots we are given, which are missing the dinosaurs, and give them back exactly as they were, but with dinosaurs included. With that in mind, there are some things that need to be done before we can go dropping our dinosaurs into the film plates.

The first step is called matchmove. This is the process by which the move of the real camera (the one the film was shot with) is tracked in 3d space. This process is aided greatly by the camera data mentioned above, tracking markers captured on film, and measurements taken on set. The end result of this step is a digital camera move that we can use in our 3d packages and that exactly matches the move the film camera made while it was shooting. This is a pretty complicated process, and if you'd like to learn more, you should check out Tim Dobbert's book, Matchmoving: The Invisible Art of Camera Tracking, as it is the best matchmoving book out there.
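A solved camera 'matches' when 3d points surveyed on set land on the pixels where their tracking markers appear in the plate after being projected through the virtual camera. The sketch below shows that check for a simple pinhole camera. It assumes an unrotated camera looking down +Z with no lens distortion. The `Camera` fields (focal length and film-back width in millimetres) and the function names are illustrative. A real solver also estimates rotation, lens distortion and much more, and it minimises this error instead of just measuring it.

```python
import math
from dataclasses import dataclass


@dataclass
class Camera:
    position: tuple[float, float, float]  # world-space camera position
    focal_mm: float                       # lens focal length in millimetres
    filmback_mm: float                    # horizontal film-back width in millimetres
    width_px: int                         # plate width in pixels
    height_px: int                        # plate height in pixels


def project(cam: Camera, point: tuple[float, float, float]) -> tuple[float, float]:
    """Project a world-space point to pixel coordinates (simplified pinhole, no rotation)."""
    x, y, z = (p - c for p, c in zip(point, cam.position))
    if z <= 0:
        raise ValueError("point is behind the camera")
    px_per_mm = cam.width_px / cam.filmback_mm
    # Offset on the film back in mm is focal * x / z; convert to pixels from the image centre.
    u = cam.width_px / 2 + cam.focal_mm * (x / z) * px_per_mm
    v = cam.height_px / 2 - cam.focal_mm * (y / z) * px_per_mm  # pixel rows grow downward
    return u, v


def mean_reprojection_error(cam: Camera, survey_points, tracked_pixels) -> float:
    """Average pixel distance between projected survey points and the tracked 2d markers."""
    errors = [math.dist(project(cam, p), t) for p, t in zip(survey_points, tracked_pixels)]
    return sum(errors) / len(errors)
```

An error near zero across all markers and frames is what "exactly matches the film camera" means in practice.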

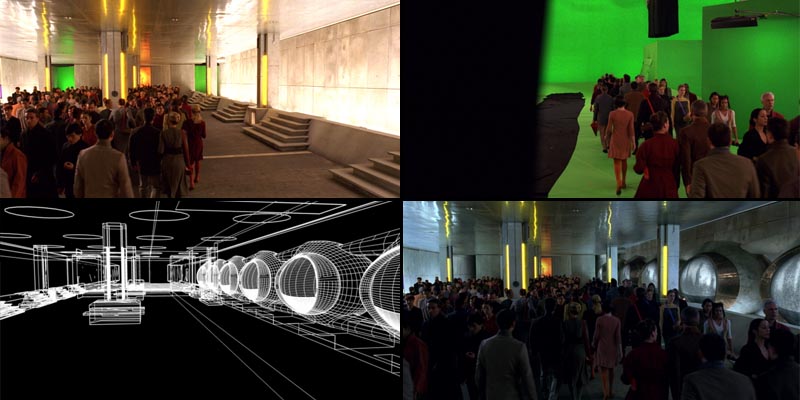

Often, while matchmove is working on their side, the Roto and Paint departments are hard at work cleaning and fixing the plates. The Roto (short for rotoscoping) department does the work that allows the dinosaurs to go behind the plants in the plate, or allows us to take the hero of the story (who was shot over a green or blue screen) and place them into a whole new environment. The folks in roto do what are called green- or blue-screen 'extractions', the end result of which is a plate with an empty hole where the green or blue was (think of a painting on glass, where you see the painting in some areas but can see through the glass in others). In cases where shooting over blue or green was not possible, or where the green or blue was too muddied, the roto folks must do it by hand. This consists of a complicated tracing of the edges of the things to be separated. This tracing must also take into account things like motion blur, camera depth of field and, in very difficult cases, things like hair, where much of the work must be done by hand by a skilled roto artist in order to avoid a strange halo around the hair later.
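The idea behind a green-screen extraction can be sketched as a very crude 'color difference' key. The more a pixel's green channel exceeds its red and blue, the more transparent it becomes. This is a hypothetical, stripped-down illustration (the function names and the `gain` parameter are made up for it) and is far simpler than a production keyer. It also hints at why hair and motion blur are hard: those pixels mix foreground and screen color, so they land on in-between alpha values.

```python
def green_key_alpha(r: float, g: float, b: float, gain: float = 4.0) -> float:
    """Return alpha in [0, 1]: 0 for pure screen green, 1 for solid foreground."""
    green_excess = g - max(r, b)       # how strongly green dominates this pixel
    alpha = 1.0 - green_excess * gain  # more excess green means more transparent
    return min(1.0, max(0.0, alpha))


def key_image(pixels, gain: float = 4.0):
    """Apply the key to a list of (r, g, b) pixels, returning (r, g, b, alpha) tuples."""
    return [(r, g, b, green_key_alpha(r, g, b, gain)) for r, g, b in pixels]
```

A bright green screen pixel such as `(0.1, 0.9, 0.1)` keys to fully transparent, while a skin tone like `(0.8, 0.6, 0.5)` stays fully opaque.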

The Paint department, as mentioned above, is also working to fix and clean up the film plates. Paint work can be as simple as painting out specks of dust on the film (from the scanning process, resulting in white flecks that flash on a single frame), or as complicated as removing whole people/cars/buildings from the shot and painstakingly replacing everything that was behind them. More often, though, the day-to-day work of the paint dept is things like wire removal (painting out the wires that were holding up an actor or actress) and removal of little mistakes (like a crew member who thought they were hidden behind a set piece, but whose hat is actually sticking up in view).

While the above things are going on, the 3d assets (virtual buildings, monsters, environments, etc.) are being modeled. This is a sort of virtual sculpting in the computer that results in a digital representation of an object. There are quite a few ways to go about modeling, each with its benefits and drawbacks, but the end result is generally about the same: a model to be passed on to the next step in the process.
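Many models end up as a polygon mesh: a list of vertex positions, plus faces that index into that list. The minimal structure below (a hypothetical `Mesh` class, not any package's API) builds a unit cube and counts its edges. Production mesh formats carry much more than this, such as UVs for texturing and normals for shading.

```python
from dataclasses import dataclass, field
from itertools import product


@dataclass
class Mesh:
    vertices: list[tuple[float, float, float]] = field(default_factory=list)
    faces: list[tuple[int, ...]] = field(default_factory=list)  # each face lists vertex indices

    def edges(self) -> set[tuple[int, int]]:
        """Unique undirected edges, taken from consecutive vertices around each face."""
        result = set()
        for face in self.faces:
            for a, b in zip(face, face[1:] + face[:1]):
                result.add((min(a, b), max(a, b)))
        return result


def unit_cube() -> Mesh:
    # Vertex index = 4*x + 2*y + z for corners at 0 or 1 on each axis.
    verts = [(float(x), float(y), float(z)) for x, y, z in product((0, 1), repeat=3)]
    # Six quads. Winding is not made consistent here, which is fine for counting edges.
    quads = [(0, 1, 3, 2), (4, 5, 7, 6), (0, 1, 5, 4), (2, 3, 7, 6), (0, 2, 6, 4), (1, 3, 7, 5)]
    return Mesh(verts, quads)
```

The cube comes out with 8 vertices, 12 edges and 6 faces, which satisfies Euler's formula V - E + F = 2 for a closed mesh like this.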

If the model happens to be a creature/character of some sort, the next step in the process is called Rigging. This is where the stuffed animal (something that has no structure besides the outer skin) gets turned into a marionette (with a skeleton, strings, etc.). The riggers add a skeletal structure, virtual muscle systems, and facial expressions, and do all the things that can turn a dead, still character into something full of life. Once a creature is fully rigged, it is passed to the animation department.
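The core property of a rig's skeleton is that rotating a parent joint carries all of its children along with it. The sketch below shows that property with forward kinematics on a flat, two-dimensional joint chain. The function name and its parameters are hypothetical. Real rigs are 3d and layer skinning, muscles and animator controls on top of this.

```python
import math


def forward_kinematics(bone_lengths, joint_angles_deg, root=(0.0, 0.0)):
    """Joint positions for a planar chain; each angle is relative to its parent bone."""
    x, y = root
    heading = 0.0
    positions = [(x, y)]
    for length, angle in zip(bone_lengths, joint_angles_deg):
        heading += math.radians(angle)  # child rotations accumulate on top of the parent's
        x += length * math.cos(heading)
        y += length * math.sin(heading)
        positions.append((x, y))
    return positions
```

Bending only the second joint by 90 degrees moves just the tip. Rotating the root by 90 degrees swings the whole chain, which is the marionette behavior described above.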

The Animation department takes the marionette created by the riggers and brings it to life. They move the characters so that they climb tall buildings, swing from trees or get eaten by monsters. In cases where the visual effects do not include creature work, the animators may be animating cars, planes, falling crystals, or anything else called for. A subset of animation that is similar in function to matchmove is called Matchimation. This is the process of animating a 3d model to match a real element in the plate. Perhaps this is so that we can add fire to someone's hand (like in Hellboy), or add water splashes, and the water itself, reacting to a person on film.
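Animators typically set values on key frames and let the software fill in the frames in between. The sketch below evaluates one animated channel (say, a joint rotation) using linear interpolation between keys, holding the first or last value outside the keyed range. The function name is hypothetical, and linear interpolation is a deliberate simplification: animation packages usually interpolate with spline curves that have adjustable tangents.

```python
from bisect import bisect_right


def evaluate_channel(keys: list[tuple[float, float]], frame: float) -> float:
    """Value of a channel at `frame`, given (frame, value) keys sorted by frame.

    Linear interpolation between keys; holds the first/last value outside the range.
    """
    frames = [f for f, _ in keys]
    if frame <= frames[0]:
        return keys[0][1]
    if frame >= frames[-1]:
        return keys[-1][1]
    i = bisect_right(frames, frame)  # index of the first key after `frame`
    (f0, v0), (f1, v1) = keys[i - 1], keys[i]
    t = (frame - f0) / (f1 - f0)  # 0 at the earlier key, 1 at the later one
    return v0 + (v1 - v0) * t
```

With keys at frame 1 (value 0) and frame 25 (value 10), frame 13 evaluates to 5.0, halfway between them.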

While the animation is going on, the model is going through the process by which it transforms from a grey plastic dinosaur (using our dinosaur example) to a dinosaur that looks real and scares the pants off of kids. This process consists of Texturing and Shader work, also often called Surfacing. Artists paint the images that are wrapped onto the model and give the surface the characteristics (color, reflectivity, sub-surface scattering) of the real surface. These images (called textures) are brought together in the shader. The shader mostly tells the computer how to interpret the textures and how the surface should respond to light. Together they can make the dinosaur look wet or dry, bumpy or smooth, or make the light shine through the pterodactyl's wings.
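The split between texture and shader can be sketched in a few lines. The texture supplies a color for each point on the surface, and the shader decides how that color responds to light. The example below pairs a nearest-neighbour texture lookup with the classic Lambertian diffuse model. The function names, the bottom-row `v` convention and the light direction convention are assumptions made for the sketch. Production shaders add specular, sub-surface scattering, bump maps and much more.

```python
import math

Color = tuple[float, float, float]


def sample_texture(texture: list[list[Color]], u: float, v: float) -> Color:
    """Nearest-neighbour lookup; u and v run from 0 to 1, with v = 0 at the bottom row."""
    height, width = len(texture), len(texture[0])
    col = min(int(u * width), width - 1)
    row = min(int((1.0 - v) * height), height - 1)
    return texture[row][col]


def normalize(vec):
    length = math.sqrt(sum(c * c for c in vec))
    return tuple(c / length for c in vec)


def lambert_shade(texture, u, v, normal, light_dir, light_color: Color = (1.0, 1.0, 1.0)) -> Color:
    """Texture color scaled by how directly the light hits the surface.

    `light_dir` points from the surface toward the light.
    """
    n, l = normalize(normal), normalize(light_dir)
    facing = max(0.0, sum(a * b for a, b in zip(n, l)))  # 0 when the light is behind the surface
    albedo = sample_texture(texture, u, v)
    return tuple(a * c * facing for a, c in zip(albedo, light_color))
```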

At this stage, all the bits and pieces begin to come together. The model, with its textures and shaders, gets the animation applied to it. The virtual camera from matchmove is brought in and the model is viewed through it. The Technical Directors (TDs) pull all the assets together and make sure everything is working properly. They then light the shot using virtual lights, in order to make it match the background plates. The shot is set up to render in a way that makes the next step (compositing) go smoothly. Rendering is the creation of a final image based on all the previous tasks. This usually means setting things up so that the elements of the shot render in what are called 'passes'. This means that for our dinosaur, one pass may be the dinosaur itself, while another pass may be the shadow the dinosaur is casting on the ground. Another pass may be the reflections of the dinosaur in nearby puddles, or perhaps a black-and-white matte pass that can be used later for color correction of a small piece of the dinosaur.
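A black-and-white matte pass is useful because it can limit a color correction to one region. Where the matte is white (1.0) the pixel takes the corrected value, where it is black (0.0) the pixel is untouched, and gray values blend between the two. The sketch below uses a per-channel gain as a stand-in for any color correction. The function names are hypothetical.

```python
Pixel = tuple[float, float, float]


def grade(pixel: Pixel, gain: Pixel) -> Pixel:
    """A simple per-channel multiply, standing in for any color correction."""
    return tuple(c * g for c, g in zip(pixel, gain))


def grade_through_matte(pixel: Pixel, matte: float, gain: Pixel) -> Pixel:
    """Blend toward the graded color in proportion to the matte value (0 to 1)."""
    graded = grade(pixel, gain)
    return tuple(orig + (new - orig) * matte for orig, new in zip(pixel, graded))
```

Because the correction lives in the composite and not in the render, it can be adjusted without re-rendering the dinosaur.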

These passes are sent off to the render farm by the TDs. The render farm is just a fancy name for a bunch of computers in a room. These computers are usually nothing more than some processors and memory, and their only task is to crunch the numbers that make a render. They are much thinner than a desktop workstation and are stacked up on racks to save space. Some are shaped sort of like a rectangular pizza box and others sort of like thin shoeboxes (nicknamed pizza boxes and blades, respectively). Anyhoo, the render farm allows many frames to be rendered all at once. Often, on TV shows about visual effects, they will mention that such-and-such effect took a total of six years of render time. One then thinks to oneself, "Well, that's silly, they didn't start making this movie six years ago, so how did it get done?" The answer is render farms. If, for example, there were 100 machines in the render farm (not very many for most visual effects houses doing film work), and they did nothing but render 24 hours a day, 7 days a week, that would amount to 16,800 render hours per week (100 x 24 x 7). That is 700 days of render time (just under 2 years) on a single machine, all fit into one week because of the render farm.
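The render-farm arithmetic above can be written out directly. The sketch assumes idealized conditions: every machine renders non-stop, and the work splits perfectly across the farm because frames render independently. It ignores queue waits, failed frames and re-renders. The function names are hypothetical.

```python
HOURS_PER_DAY = 24


def farm_render_hours(machines: int, hours_per_day: float = 24, days: float = 7) -> float:
    """Total machine-hours a farm delivers, assuming every machine renders non-stop."""
    return machines * hours_per_day * days


def weeks_to_render(total_machine_years: float, machines: int) -> float:
    """Wall-clock weeks for a job, assuming its frames split perfectly across the farm."""
    total_hours = total_machine_years * 365 * HOURS_PER_DAY
    return total_hours / farm_render_hours(machines)


if __name__ == "__main__":
    hours = farm_render_hours(100)               # 100 x 24 x 7 = 16,800 machine-hours
    single_machine_days = hours / HOURS_PER_DAY  # 700 days, just under 2 years
    print(hours, single_machine_days, single_machine_days / 365)
    print(weeks_to_render(6, 100))               # "six years of render time": about 3.1 weeks
```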

Finally, the renders are handed off to the Compositors, who combine them with the film plates. The compositors use the cleaned plates from the Paint dept, the roto mattes from the roto dept and the renders from the TDs and put them all together in their compositing package. They add their own tweaks, making colors match perfectly and adding things like haze and depth-of-field. They make sure that everything looks real and all the details are perfect and then they render out their images, which are shipped off, put on film and that is what you see in the theatre (more or less).
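The basic operation for layering a render on top of a plate is usually the 'over' operation from Porter and Duff's compositing algebra. The sketch below assumes premultiplied color (RGB already multiplied by alpha), which is common for rendered passes. It stacks layers from back to front. The function names are hypothetical, and a real compositing package does far more than this, such as the color matching, haze and depth-of-field work mentioned above.

```python
from functools import reduce

RGBA = tuple[float, float, float, float]


def over(fg: RGBA, bg: RGBA) -> RGBA:
    """Porter-Duff 'over' for premultiplied color: fg + bg * (1 - fg alpha)."""
    fg_alpha = fg[3]
    return tuple(f + b * (1.0 - fg_alpha) for f, b in zip(fg, bg))


def comp_stack(layers: list[RGBA]) -> RGBA:
    """Composite layers listed back to front (plate first, then each pass on top)."""
    return reduce(lambda below, layer: over(layer, below), layers[1:], layers[0])
```

For example, a 50%-opaque black shadow pass over a blue plate pixel `(0, 0, 1, 1)` halves the blue while leaving the result fully opaque.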

That is roughly how the visual effects process works. There are really quite a few more details to it and in some cases some steps are skipped or roles overlap. But overall, this is generally how it all goes.

Q: Are the effects that you do storyboarded before a shoot, and, if so, do you start working on them before you get the footage?

A: Often, yes, we get storyboards and concept art before the shooting has taken place. This allows us to do much of the development work before we receive the film plates. In many cases, with complex effects, the actual method of how to complete the effect is not known at the beginning of the process. This may require a bit of research and development time, to decide which is the best approach or to write the code needed to get the job done. This R&D work often takes place before the plates arrive.

Also, much of the organization and packaging of effects takes place before the plates arrive. In order to allow many artists to work on the same sort of effect across many shots (adding rain to lots of shots where it wasn't raining, for example), the effects, once developed, need to be packaged up by the Lead Artists and made ready for other artists to handle. This can be as simple as saving a scene out and publishing it to the asset management system, or more complex, like building a rig that makes the effect easy for other artists to use.

Q: What image formats are used during the vfx process, including the source images, working formats and final delivery?

A: Generally the scanned film plates are either Cineon or DPX (a variant of Cineon) files. These are 10-bit images in log color space, which allows the 10 bits' worth of information per channel to be used more efficiently by the file format. The 10 bits amount to 1,024 possible values per channel and 1,073,741,824 possible colors per pixel. That seems like quite a few, but in practice it can be a bit limiting.
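Converting these log code values to linear light is one of the first things a pipeline does with Cineon/DPX plates. The sketch below uses a commonly cited form of the Cineon conversion with the conventional reference values: white at code 685, black at code 95, 0.002 film density per code value, and a negative gamma of 0.6. Treat these constants as assumptions, since facilities and tools may use different settings. Code values above 685 come out greater than 1.0, which is the brighter-than-white headroom that log encoding preserves.

```python
import math

# Conventional Cineon reference values (assumed; facilities and tools may differ).
REF_WHITE = 685           # 10-bit code value treated as diffuse white
REF_BLACK = 95            # 10-bit code value treated as black
DENSITY_PER_CODE = 0.002  # film density represented by one code value
NEG_GAMMA = 0.6           # gamma of the film negative


def _code_to_raw(code: float) -> float:
    return 10.0 ** ((code - REF_WHITE) * DENSITY_PER_CODE / NEG_GAMMA)


BLACK_OFFSET = _code_to_raw(REF_BLACK)


def cineon_to_linear(code: float) -> float:
    """Map a 10-bit log code value (0-1023) to linear light: white -> 1.0, black -> 0.0."""
    return (_code_to_raw(code) - BLACK_OFFSET) / (1.0 - BLACK_OFFSET)


def linear_to_cineon(value: float) -> float:
    """Inverse of cineon_to_linear, for non-negative linear values."""
    raw = value * (1.0 - BLACK_OFFSET) + BLACK_OFFSET
    return REF_WHITE + math.log10(raw) * NEG_GAMMA / DENSITY_PER_CODE
```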

As a result, other formats are used as the working formats during the effects process. These are usually either 16-bit or what is called floating point. A 16-bit format has 65,536 possible values per channel, resulting in 281,474,976,710,656 (lots) possible colors. The other big thing about the higher-bit-depth formats, floating point in particular, is that they can store colors that are brighter than white. These are things like reflections of very bright lights, the sun, etc. While working on the effects, having this brighter-than-white data can help quite a bit, as it allows the compositors to use it to control other effects, scale it down into the visible range, or do other comp-mojo like that. Examples of 16-bit formats include .png, .tif and .sgi (all of which have 8-bit variants as well).
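The per-channel and per-pixel counts follow directly from the bit depth. One channel holds 2^bits values, and an RGB pixel can take that many values cubed. The function names below are illustrative.

```python
def values_per_channel(bits: int) -> int:
    """Distinct integer code values one channel can hold."""
    return 2 ** bits


def colors_per_pixel(bits: int, channels: int = 3) -> int:
    """Distinct colors for a pixel: one channel's count raised to the number of channels."""
    return values_per_channel(bits) ** channels


if __name__ == "__main__":
    for bits in (8, 10, 12, 16):
        print(f"{bits}-bit: {values_per_channel(bits):,} values per channel, "
              f"{colors_per_pixel(bits):,} colors per pixel")
```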

Even better than 16-bit integer is 'floating point', which usually means 32 bits per channel (OpenEXR also offers a 16-bit 'half' float) and way more colors than one can shake a stick at. It allows for overbrights (values greater than white) and is the render bit depth of choice. The most commonly used format that supports floating point is OpenEXR. EXR was originally developed by ILM for their own use, but they were nice enough to make it an open format, and it is now used almost everywhere (as far as I know).
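The practical difference shows up when a compositor scales a very bright value back down. The sketch below stores a hypothetical sun glint four times brighter than white in three ways, then applies an exposure of 0.25. The three storage options are a 16-bit integer code clipped to 0..1, a 32-bit float, and a 16-bit half float. Python's `struct` module (format characters `f` and `e`) stands in for the float storage. This only illustrates the number formats and does not read or write actual EXR files. The pixel value, exposure and function names are all illustrative.

```python
import struct


def to_uint16(value: float) -> int:
    """Store a linear value as a 16-bit integer code; anything outside 0..1 is clipped."""
    return round(min(max(value, 0.0), 1.0) * 65535)


def from_uint16(code: int) -> float:
    return code / 65535


def round_trip_float(value: float, fmt: str = "<f") -> float:
    """Pack and unpack through a float format: '<f' is 32-bit float, '<e' is 16-bit half."""
    return struct.unpack(fmt, struct.pack(fmt, value))[0]


if __name__ == "__main__":
    glint = 4.0      # hypothetical pixel four times brighter than white
    exposure = 0.25  # the compositor scales it down into the visible range
    print("16-bit integer:", from_uint16(to_uint16(glint)) * exposure)  # clipped: 0.25
    print("32-bit float:", round_trip_float(glint) * exposure)          # detail survives: 1.0
    print("16-bit half:", round_trip_float(glint, "<e") * exposure)     # also 1.0
```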

The final delivery format is often either Cineon or DPX.

Q: Are all the computers networked at your job so people can work on the same effects?

A: Yes. As mentioned in the general description above, there are many steps and many people working on film visual effects. There are, of course, very small boutique houses that can do some film work with a small number of people, but even there the computers must all be able to talk to each other. Often very little, if any, of the working data is kept on the computers the artists are working on. Usually the film plates, project files, renders, etc. are kept on a central server or servers and accessed across the network by all the other computers. This allows multiple artists to work on the same shots, and also allows the render farm to see all the same files, so things can be rendered without tying up the artists' workstations.

Q: How does one go about learning to do what you do? Did you learn as you went, or beforehand?

A: One can do a great deal of the technical learning beforehand. On the other hand, there is no substitute for experience. There are a lot of situations in the visual effects industry (and really, in any industry) that you can't really train for. This is particularly true because of the rate at which technology is evolving. Visual effects are often at the forefront of computer technology, so there may be no one else who knows more about a certain technique than the people you are working with.

As a counterpoint to this, the thing that is best to learn beforehand is how to be flexible, adaptable and good with people. Since the only certainty is that things will change, being prepared for, and willing to accept, that change will put you in a good place. Also, since vfx crews are made up of a lot of people, learning to tolerate a wide variety of personality types can help a great deal.

Q: Does an effects house usually get hired for an entire film or just a portion of it?

A: That usually depends on the scope and schedule of the film. Many of the large summer blockbuster movies, with heaps of visual effects, will have a primary effects house and a few additional effects houses. The main house will do many of the main effects and work on the show for the longest time, while the additional houses often pick up the less difficult effects or the ones that were not originally anticipated and scheduled.

On smaller films (with fewer effects), there will often be only a single effects house that does all the effects, although the opening and closing titles are often done separately from the visual effects. Some films, such as Sin City, are split roughly evenly between multiple houses (Sin City had three houses doing roughly equal amounts of work). This was because pretty much every single shot in Sin City had a visual effects component, so there was a very large amount of work to be done. It also had to do with the director's preferences for certain visual effects houses and his own method of working.
